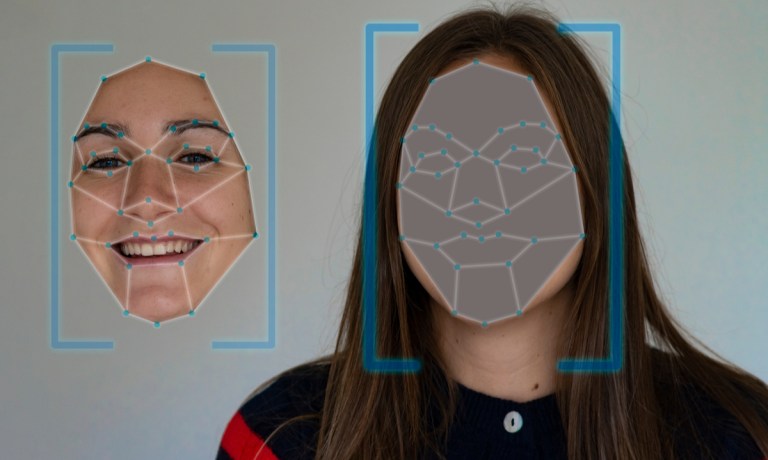

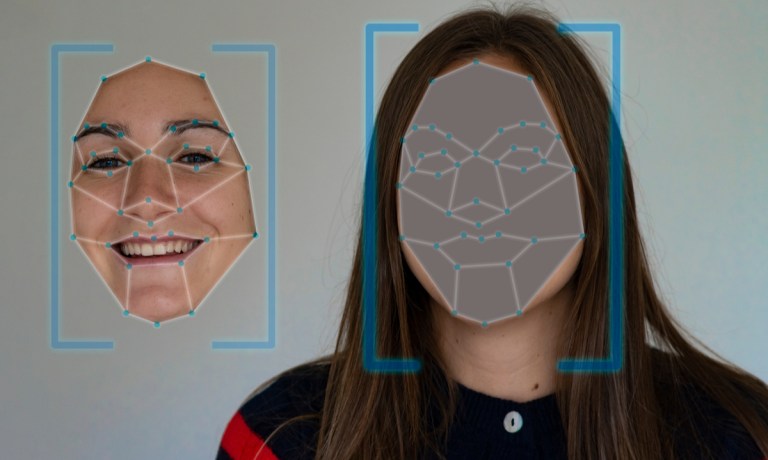

That’s because as the capabilities of generative artificial intelligence (AI) advance, the images and media that the models are able to produce are becoming more realistic, making it more and more challenging to discern what is real and what has been generated by AI.

In a bid to solve this problem, Adobe and other companies including Arm, Intel, Microsoft and Truepic have established a symbol that can be attached to content alongside metadata, listing its provenance, including whether it was made with AI tools.

The participating firms are founding members of the Coalition for Content Provenance and Authenticity (C2PA), a group whose goal is to create technical standards to certify the source and provenance of content, and which owns the trademark to the symbol.

The move comes as social media companies and other online platforms face increasing scrutiny and pressure around their ability to police and flag inappropriate uses of AI-generated content, which for the most part continues to spread across the web unchecked.

On Tuesday (Oct. 10), the European Union (EU) sent a stern letter to Elon Musk, warning the tech billionaire about the spread of misinformation on his social media site X, formerly known as Twitter.

If Musk fails to comply with the EU’s warnings, he is liable to pay a fine of up to 6% of X’s revenues, or face a total blackout across the 27-nation bloc.

Also on Tuesday, Meta’s Oversight Board announced it was opening a case to examine whether the social media giant’s existing guidelines on modified content could “withstand current and future challenges” posed by AI. The investigation is the first of its kind, and comes as U.S. lawmakers press the platform over its ability to contain misinformation in advance of the upcoming 2024 presidential election.

Tech Companies Face Growing Challenge

The investigation by Meta’s Oversight Board aims to dig into the company’s “human rights responsibilities when it comes to video content that has been altered to create a misleading impression of a public figure, and how they should be understood with developments in generative artificial intelligence in mind,” as well as the “challenges to and best practices in authenticating video content at scale, including by using automation.”

And as the increasingly multimodal capabilities of generative AI become more fine-tuned, allowing for the creation of hyperrealistic images from just a few lines of text or less, interest in AI detection and regulation has intensified.

Pressure is coming both internally and externally. The White House has already gone on record saying that it wants Big Tech companies to disclose when content has been created using their AI tools, and the EU is also gearing up to require tech platforms to label their AI-generated images, audio and video with “prominent markings” disclosing their synthetic origins.

The only potential, if glaring, snarl is that there doesn’t yet exist a truly foolproof method to detect and expose AI-generated content, according to PYMNTS Intelligence. That is why self-regulatory and proactive measures like the C2PA symbol are being promoted.

“As long as you can tell consumers what the content is made of, they can then choose to make decisions around that information based on what they see. But if you don’t give that to them, then it’s that shielding and blackboxing that the industry needs to be careful with, and where regulators can step in more aggressively if the industry fails to be proactive,” Shaunt Sarkissian, founder and CEO of AI-ID, told PYMNTS.

“The food industry was the first sector to really start adopting things like disclosure of ingredients and nutrition labels, providing consumers transparency and knowledge of what’s in their products, and with AI, it’s much of the same. Companies need to say, ‘Look, this was AI-generated, but this other piece was not,’” Sarkissian added. “Get into the calorie count, if you will.”

Still, things like nutrition labels are an imperfect solution to a complex problem — they require the user to opt in, whereas most of the threat from AI-generated deepfakes typically comes from less morally conscious users.

While responsible disclosure can create a more trustworthy information ecosystem where AI-generated content can thrive online, end users are still advised to keep their wits about them until actual and accountable regulation of the technology is passed, whenever that may be.
