As generative artificial intelligence (AI) grows by leaps and bounds, countermeasures to keep tabs on artificially generated content are emerging. The challenge for companies developing these solutions is not only that their tools are new, unproven and offer mixed success but also that these tools’ capabilities will be increasingly stretched as AI-generated content grows and becomes more humanlike.

Leading AI developers and technology companies — including Amazon, Google, Meta, Microsoft and OpenAI — have made voluntary commitments to the Biden-Harris administration to ensure that their generative AI content tracker tools are effective. Whether those businesses honor their commitments, and to what degree their promises hold substance, remains to be seen.

Content scouts are AI tools themselves.

Many generative AI tools take keywords or a prompt related to a topic as input, draw on existing content about that topic and then produce rewritten text autonomously. This is where content scouts come in. These tools, which are AI tools themselves, analyze text for patterns and characteristics indicative of AI-created content. No foolproof method for detecting and exposing artificial content exists today, and the current crop of content scouts has a spotty track record for accuracy and consistency. There may never be a perfect tool, especially as AI-generated content becomes more sophisticated and humanlike.

Nevertheless, content scouts try to identify generative AI content from clues in the text, such as a lack of typos or misspellings, information with no accompanying sources, or short, flat-sounding sentences. Repeated words or phrases and the absence of analysis or commentary offer additional hints. None of these signals is conclusive on its own.
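To make these clues concrete, here is a minimal Python sketch that measures three of them: average sentence length, the share of repeated two-word phrases and whether the text points to any source. It is an illustration only, not how any particular content scout works. The function names, the regular expressions and the choice of two-word phrases are all assumptions made for this example, and the typo check is left out because it would need a dictionary.

```python
import re
from collections import Counter


def split_sentences(text):
    """Split on ., ! or ? followed by whitespace; crude but dependency-free."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def text_signals(text):
    """Return a few surface signals for a text; hints only, not a verdict.

    Hypothetical helper for illustration; real detectors are more involved.
    """
    words = re.findall(r"[a-z']+", text.lower())
    sentences = split_sentences(text)

    # Average sentence length in words (short sentences count as a hint).
    avg_len = len(words) / len(sentences) if sentences else 0.0

    # Share of two-word phrases that occur more than once.
    bigrams = list(zip(words, words[1:]))
    counts = Counter(bigrams)
    repeated = sum(c for c in counts.values() if c > 1)
    repeat_ratio = repeated / len(bigrams) if bigrams else 0.0

    # Whether the text points to a source (a URL or a bracketed citation).
    has_source = bool(re.search(r"https?://|\[\d+\]", text))

    return {
        "avg_sentence_len": avg_len,
        "repeat_ratio": repeat_ratio,
        "has_source": has_source,
    }


if __name__ == "__main__":
    sample = "The tool is fast. The tool is good. The tool is new."
    print(text_signals(sample))
```

Numbers like these only flag text for a closer look. A careful human writer can produce short, repetitive, unsourced prose too, which is one reason accuracy remains spotty.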

Will voluntary commitments be enough?

If history is any indication, the initial measures technology companies and regulators have taken may not be enough. The preventive measures that technology companies offer today, either with good intentions or to generate goodwill, may also be inadequate. This is partly because of commercial interests and partly because of unforeseen societal implications of generative AI.

Going forward, companies that use generative AI will draw scrutiny from regulators. Regulators are likely to cite concerns around consumer or public manipulation, the spread of misinformation or dissemination of harmful content. In response, companies leveraging generative AI will most likely emphasize the benefits of these technologies, downplay troubling scenarios as outliers and seek ways to circumvent regulation.

Cybersecurity dons a layer of AI.

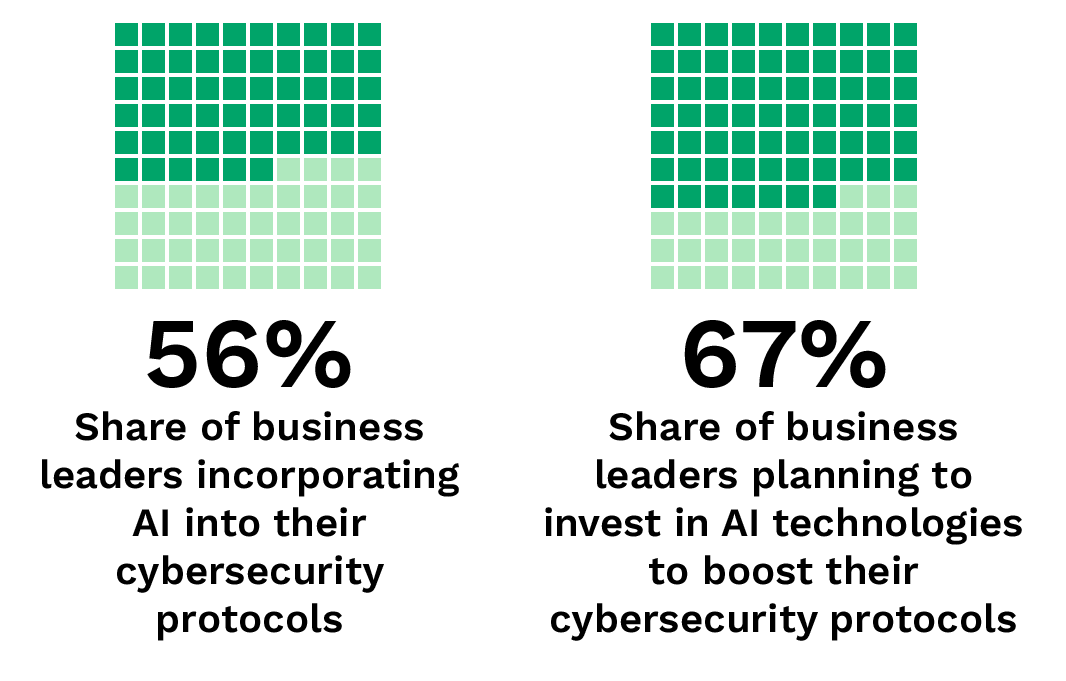

Cybersecurity breaches are a primary concern across many industries, prompting organizations to address the threat by enhancing their data security protocols to block unauthorized access and stop account takeovers. To this end, companies are using AI to integrate fraud monitoring, identity verification databases, data encryption, virtual private networks (VPNs) and antivirus software into their business systems and processes.

While the use of generative AI in cybersecurity is still in its early stages, it will not be long before companies incorporate generative AI capabilities into their security solutions.

The repercussions of cybersecurity breaches for affected industries include theft of trade secrets, leaks of confidential information and the proliferation of synthetic identities, which can lead to the creation of fake accounts or the takeover of valid, existing accounts. Breaches can also damage a company’s reputation and brand in the public eye.