We live during one of the most connected periods of human history.

That trend is only set to accelerate, particularly now that we are a full calendar year into the advent of generative artificial intelligence (AI).

After all, connection begins with — and is defined by — expression, and the expansive capabilities of multimodal AI when applied to voice recognition interfaces are already transforming the way individuals and businesses alike interact.

Throughout 2023, a drumbeat of voice AI announcements saw the intelligent conversational technology chosen as the primary user interface of the world’s first purpose-built AI device, integrated into quick-service and fast-casual restaurants across America, and further fine-tuned as AI systems from Google, Meta, OpenAI, Anthropic and other industry players pushed forward with voice-driven interactions.

At the core of voice AI is speech recognition, which converts spoken language into text. Rapid advances in neural networks and deep learning over the past year have significantly improved the accuracy of speech recognition systems, making them more reliable at interpreting and responding to spoken language in real time with increasingly human-like acuity.

But despite its vast promise as a generalist solution, voice AI, to date, is still a nut waiting to be cracked.


That’s because even the most advanced AI machines do not operate the way the human mind works. With artificial general intelligence (AGI) still a far-off goal, today’s AI systems perform best when they are trained on and built atop domain-specific, localized data sets for purpose-designed tasks, such as restaurant ordering from a preset menu or ambient notetaking within a clinical healthcare setting.


How Voice AI Will Augment Human Intelligence

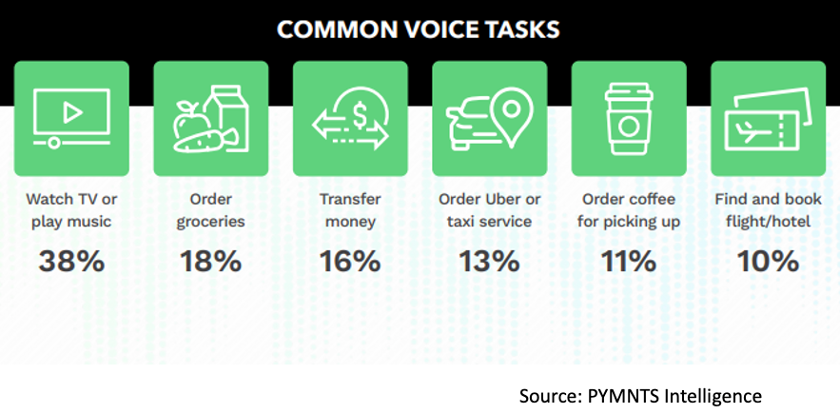

Voice is one of the more effortless ways for users to engage, and even transact, with AI platforms, and PYMNTS Intelligence finds that 86 million U.S. consumers now use voice assistants each month, while nearly 1 in 3 U.S. millennials already use a voice assistant to pay their bills.

But effortless doesn’t equate to flawless, and voice AI still has kinks to work out beyond conversational realism, including concerns about privacy, security and the ethical use of voice data.

“[When voice AI first started,] consumers wanted to have those sci-fi-style, open-ended conversations [with robots], and many were disappointed because the tools at that time could only play music, set timers, tell you the weather,” Keyvan Mohajer, CEO and co-founder of conversational intelligence platform SoundHound, told PYMNTS, adding that these limited “utility occasions” had the unfortunate side effect of causing consumers to lower their expectations around voice AI applications.

PYMNTS Intelligence found that 63% of consumers say they would use voice technology if it were as capable as a person, 58% would use it if it were easier and more convenient than doing tasks manually, and 54% would use it because it is faster than typing or using a touchscreen.

And as the evolution of voice AI comes to include more nuanced and context-aware interactions, those adoption inflection points will only become more compelling to digital-first consumers who increasingly expect that hands-free voice technologies will make their everyday routines intuitive, simple, and more connected.


Bringing Science Fiction Fantasies to Everyday Reality

“Computers can now behave like humans. They can articulate, they can write and can communicate just like a human can,” Beerud Sheth, CEO at Gupshup, told PYMNTS in an interview posted Nov. 7.

Already, companies like Microsoft and Meta are investing in text-to-speech AI technology that allows users to create talking avatars with text input and build real-time interactive bots using human images.

In the future, purpose-built AI bots may be tailored to specific categories and able to respond to voice prompts with their own conversational outputs.

White Castle’s vice president of marketing and public relations, Jamie Richardson, told PYMNTS in an interview posted Monday (Dec. 18) that he anticipates a surge in voice automation in restaurants in the year ahead, especially at the drive-thru.

Fraud Fears

However, hands-free voice technologies aren’t reserved solely for use by good actors and beneficial businesses.

While the rise of AI and a range of hyper-enabled chatbots and voice functions is changing the digital assistant game, it is also giving cyber fraud a shot in the arm.

That’s because generative voice AI is becoming worryingly good at cloning voices and generating them outright, which makes it a highly effective way both to dupe scam victims and, potentially, to compromise voice-based biometric gateways.

The Federal Trade Commission (FTC) has taken a proactive stance in protecting consumers from the potential dangers of AI-enabled voice cloning technology, but the capability adds a new and threatening dimension to the cybercrime landscape.

As voice technologies continue to advance, the next step is going multilingual, expanding access to the hands-free AI experience around the world.