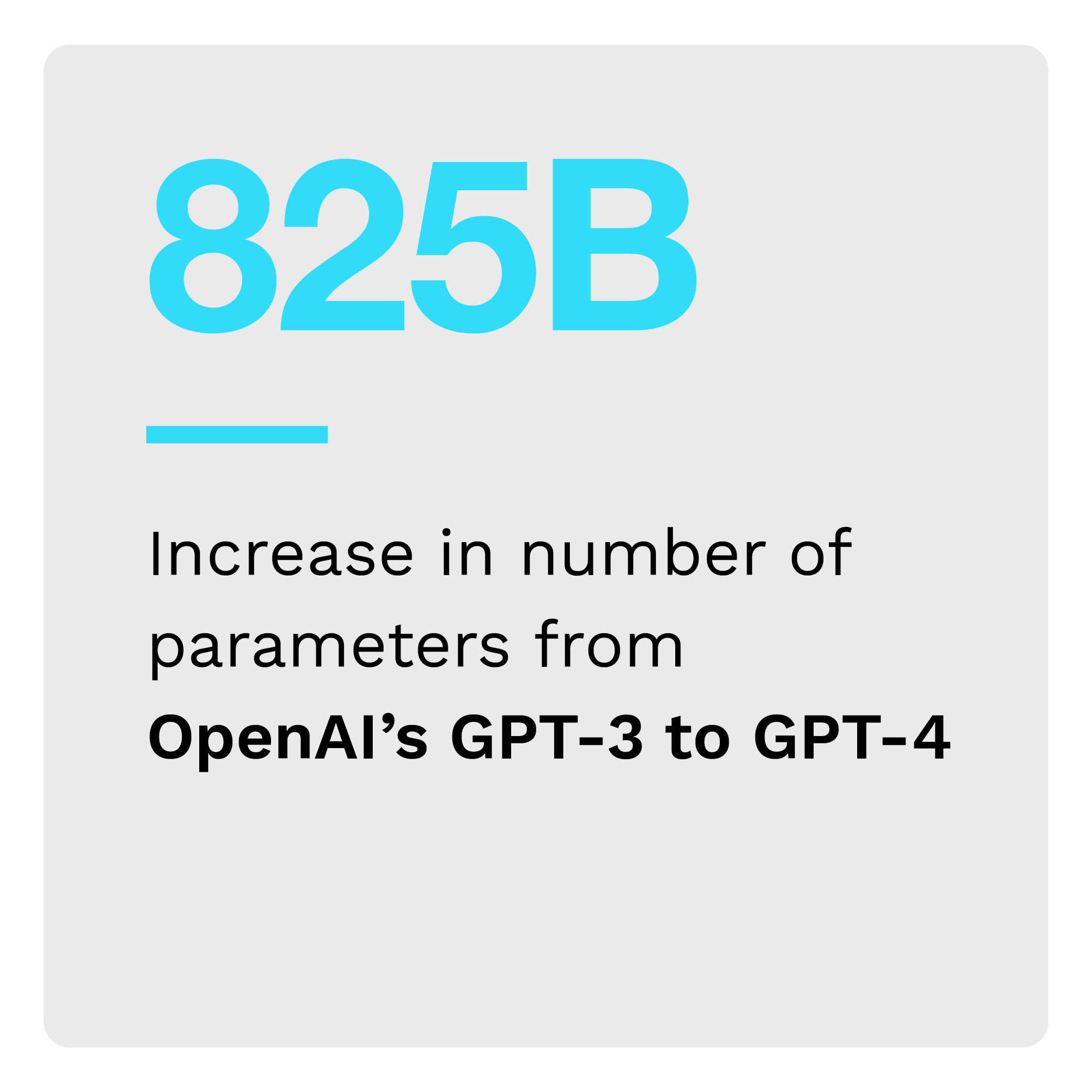

Large language models (LLMs) take generative artificial intelligence (AI) beyond text to include images, speech, video and even music. As they build, LLM creators will contend with the challenges of collecting and classifying vast amounts of data and understanding the intricacies of how models now operate and how that differs from the previous status quo.

Technology giants, including Alphabet and Microsoft, and investors, such as Fusion Fund and Scale VC, are making massive investments in LLMs. This task, however, is a big one. They must ensure their LLM products gather, train and fine-tune large data sets so that they generate the desired results.

The “Generative AI Tracker®” examines how creators are contending with costs and ethical considerations as LLMs take generative AI to a new level of sophistication and industry specificity.

LLMs to Upgrade Chatbots for Industry-Specific Use Cases

Eager to build on their earlier successes in AI, chatbots and data analytics, technology companies are harnessing the power of LMMs to bring new, more specialized generative AI products to life. Google just introduced PaLM 2, which powers its proprietary experimental and multilingual chatbot, Bard. Harvey, Casetext and LexisNexis all introduced LMM-based AI assistants to address legal matters. Meanwhile, Bloomberg is rolling out an LLM that draws from its vast trove of financial data. These innovations are just the beginning. Even more specialized LLM-driven AI products from dozens of other notable players are coming online.

To learn more, visit the Tracker’s Companies of Note section.

The Race Is on for LLM Dominance

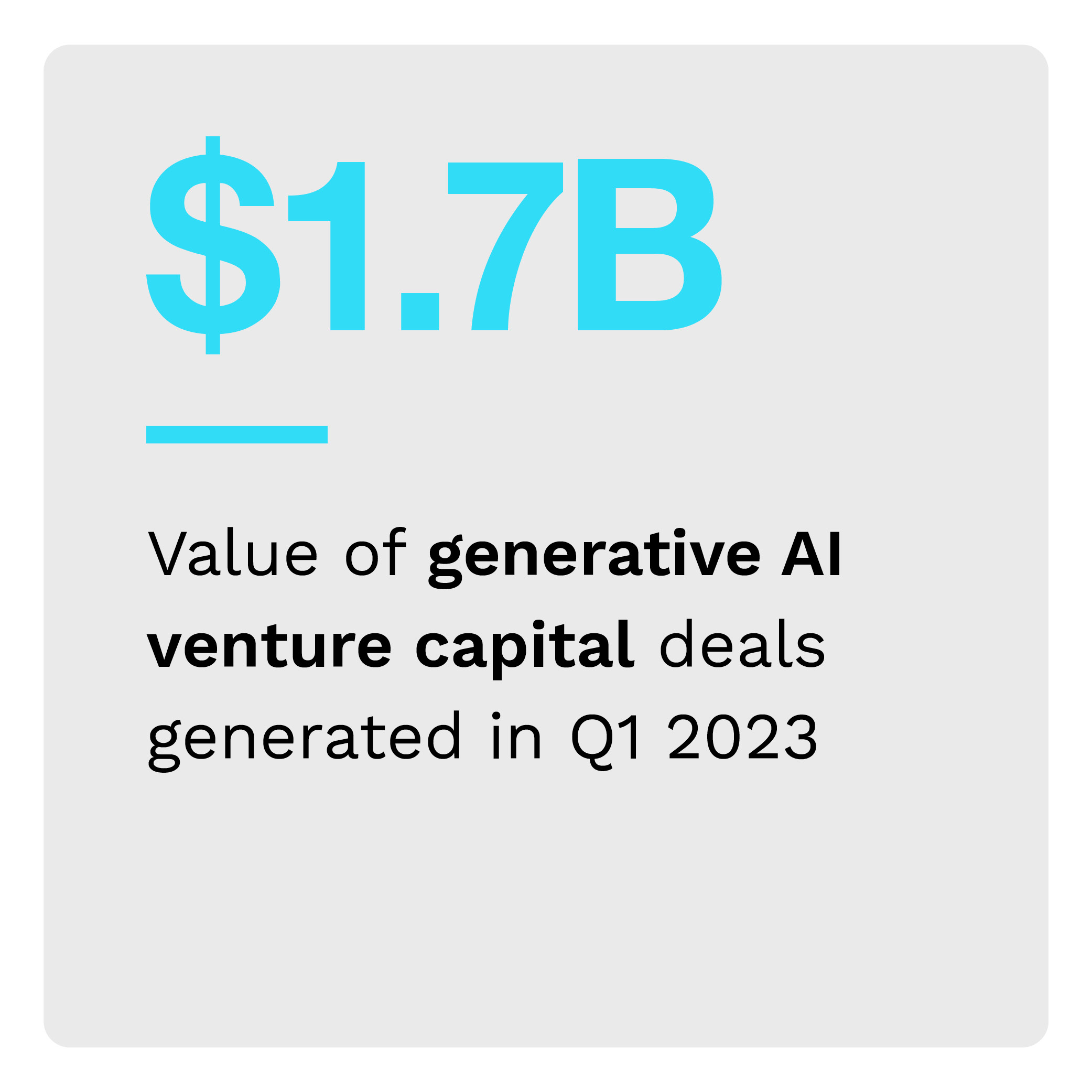

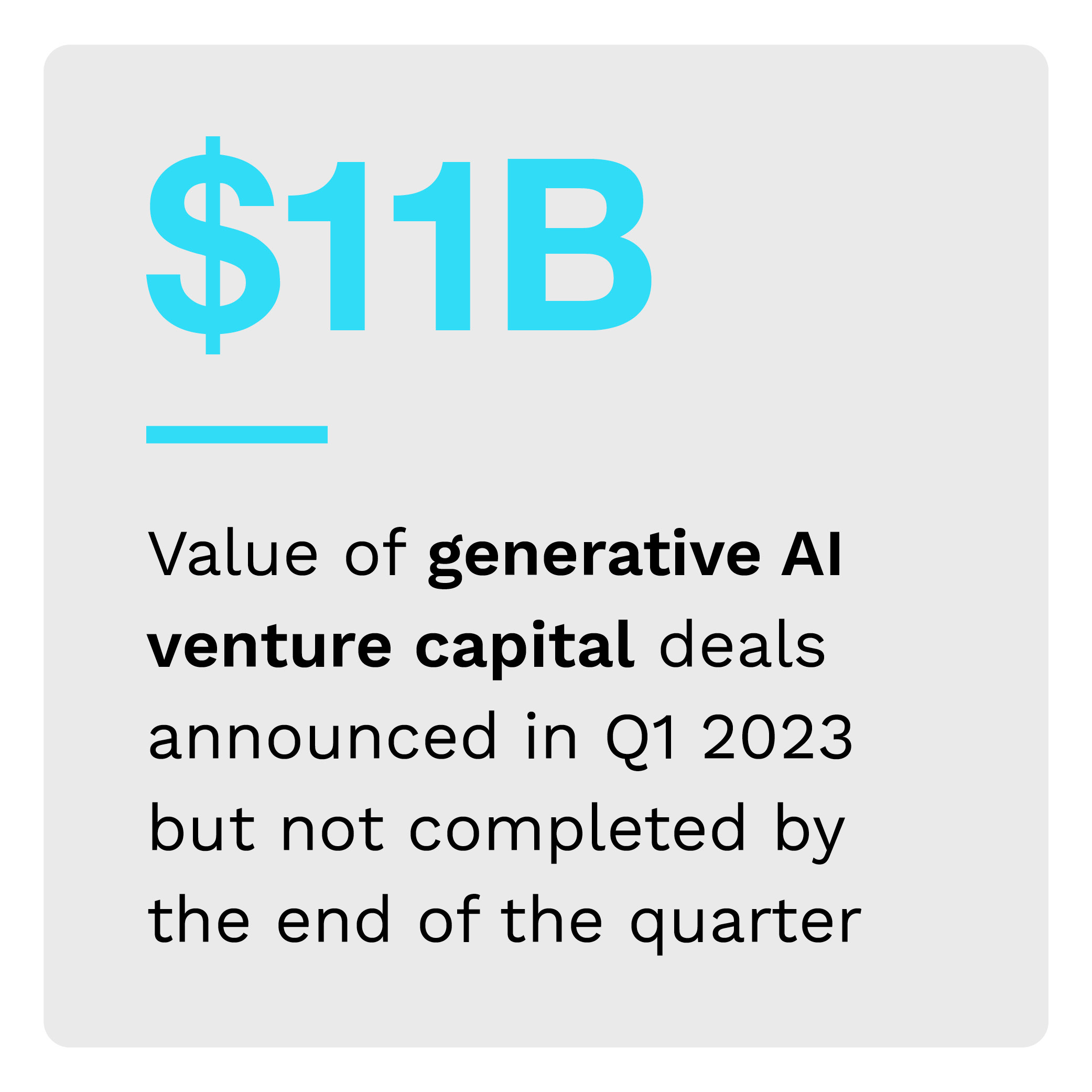

Technology companies are racing to establish LLMs and shape the next iteration of AI, and investors are cheering them on with massive cash infusions. Investors currently favor the mass appeal of open-source models such as NVIDIA’s NeMo. Still, their attention will gradually shift from open- versus closed-source models to those with varying parameter sizes and the ability to integrate with external tools and APIs. In this high-stakes pursuit for investment and market supremacy, industry players try to stay in step with regulators by committing to responsible and accountable practices to ensure data privacy and curb misinformation and ethics violations.

To learn more, visit the Tracker’s Innovation and Use Cases section.

Ethics, Privacy, Deepfakes and Bad Intentions Pose Challenges for LLMs

Because both open- and closed-source LLMs use proprietary data, both will face challenges. However, closed-source models will likely face heavier scrutiny and criticism as users, investors and regulators lack visibility into the underlying models, data sets and assumptions used. This lack of visibility can make it hard to spot privacy abuses, misinformation and bad intent. If false information spreads to unsuspecting audiences, the consequences can have lasting effects. Such power is difficult to police, judge or punish and requires industry partners to ensure accountability.

Because both open- and closed-source LLMs use proprietary data, both will face challenges. However, closed-source models will likely face heavier scrutiny and criticism as users, investors and regulators lack visibility into the underlying models, data sets and assumptions used. This lack of visibility can make it hard to spot privacy abuses, misinformation and bad intent. If false information spreads to unsuspecting audiences, the consequences can have lasting effects. Such power is difficult to police, judge or punish and requires industry partners to ensure accountability.

To learn more, visit the Tracker’s Issues and Challenges section.

About the Tracker

The “Generative AI Tracker®,” a collaboration with AI-ID, examines how creators are contending with costs and ethical considerations as LLMs take generative AI to a new level of sophistication and industry specificity.