Technology giants such as Alphabet and Microsoft and investors such as Fusion Fund and Scale VC are investing in LLMs and forming partnerships. The technology companies’ and investors’ task is a big one. It includes ensuring their LLM protégés gather and train large data sets, called parameters, and fine-tune them so that they execute and generate desired outputs or results.

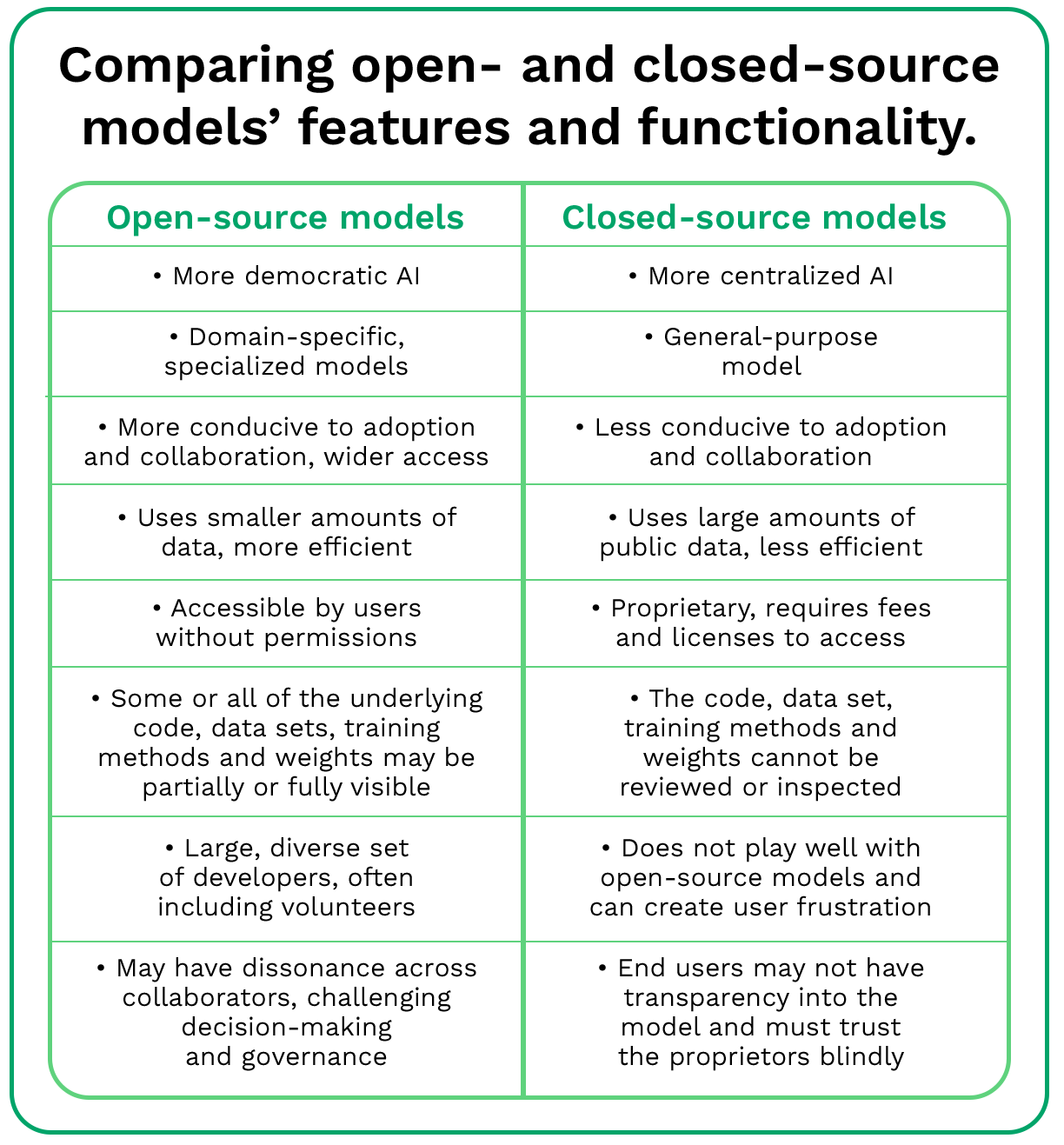

Understanding open- and closed-source models

Open-source models allow individuals or firms to access and modify the model’s design and code, permitting an open exchange of ideas as well as collaboration and transparency. Those who use open-source licenses to build products and services are expected not to charge for those services and to share the program’s source code, as in the web’s early days.

Closed-source models are considered proprietary intellectual property. As such, owners restrict the ability to view and modify the code. Usage of these models requires licenses and comes with restrictions on not changing the code. For these reasons, closed-source models tend to work better in general-purpose and consumer-oriented applications.

LLMs require effort to build and deploy

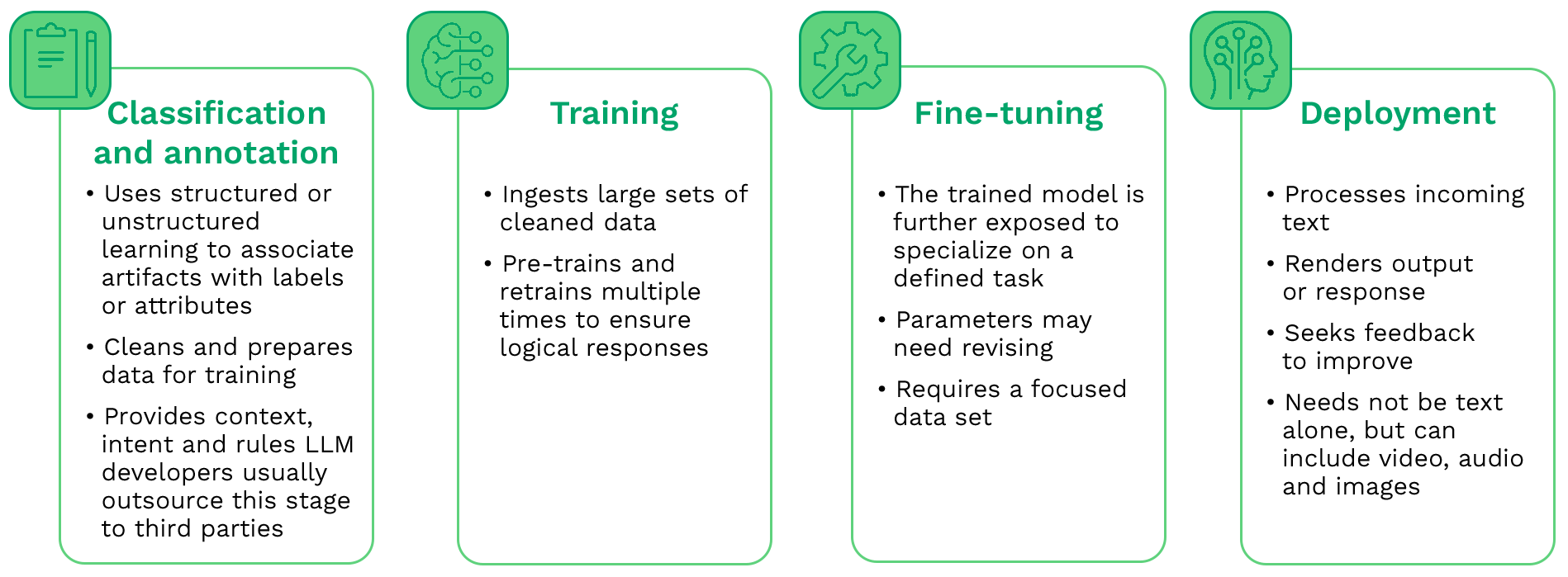

PYMNTS has simplified the detailed world of LLM development into several key categories. The first category — what we call classification and annotation — is about labeling data to assign meaning and imbue context. Training the data applies structured and unstructured learning to the data. This is followed by fine-tuning, in which the data is primed to generate specialized outputs.

Once ready, the model can be deployed to receive incoming queries or questions and generate contextually appropriate, relevant responses.

Classification and annotation make data usable. LLMs require data, classifications, context and process to fulfill their promise. Data in very large quantities is the main ingredient for LLMs. Richer data sets provide more material or input with which the model can train to learn how to generate a relevant response.

Data on its own is meaningless. To be useful to models, it needs to be sorted, labeled, measured, clustered and categorized in myriad different ways. If data is the raw material, classifications are its identifying labels. Classification and annotation can also imbue data with the right context and intent, conveying what a human means or intends to say, for example.

Steering these volumes of data through rule sets with correct context is a work in progress. The effort requires that the model reviews and connects the dots with whatever happened earlier or happens later in the chat or text.

Classification or annotation provides explanations or labels for each artifact in a data set later used to train LLMs. Training can be done through supervised learning (where the text is assigned a predefined class) or unsupervised learning (where it is grouped with similar text but with no instructions).

Training the data and then fine-tuning it is easier said than done. LLMs ingest large sets of clean data but require long periods of time — from three hours to 45 days, depending on the size of the data set — to pre-train and retrain to generate logical, contextual and accurate responses. Fine-tuning the model is far from automatic and takes considerable time.

A pre-trained LLM is ready to be fine-tuned: specialized for a defined, specific task. This may require that model parameters be revised. If the model needs to use tools or services accessible via an application programming interface (API), the training will need to account for that as well.

Deployment is the moment of truth. Once trained, a model is ready for deployment. This is where the rubber meets the road: LLMs receive and process incoming text and render an output or response. Instead of conveying the automated, canned responses typical of chatbots, LLMs can wow audiences with audio and video, and even music, as audiences can see the image of a human moving their mouth to speak and make sounds while making sensible, logical conversation.

It is a bonus if the pre-trained models have a feedback loop that allows them to improve with each iteration. This, however, is a nice-to-have option rather than table stakes.

The (winning) case for open source

Open-source models are popular because they are easily accessible and free — or have free versions, often with newer parameters. Access may be open, but the underlying tools, code, algorithm logic and infrastructure may not be. Most likely, open-source models’ owners may make a base model — with limited parameters — available to users, reserving the right to charge for a version with greater parameters. After all, larger numbers of parameters generate a higher cost of training.

Closed-source models are proprietary, with owners restricting the ability to view and modify the code. Their usage usage requires licenses, and users must trust the model owners’ integrity.

![]()

![]() Large language models (LLMs) are lifting artificial intelligence (AI) to new heights by expanding its capabilities beyond text to include images, speech, video and even music. As they build, companies developing LLMs will contend with the challenges of collecting and classifying large amounts of data — as well as understanding the intricacies of how models now operate and how that differs from the previous status quo.

Large language models (LLMs) are lifting artificial intelligence (AI) to new heights by expanding its capabilities beyond text to include images, speech, video and even music. As they build, companies developing LLMs will contend with the challenges of collecting and classifying large amounts of data — as well as understanding the intricacies of how models now operate and how that differs from the previous status quo.